The elastic query feature in Azure SQL Database allows you to run SQL queries that span multiple Azure SQL Databases, enabling the sort of cross-database queries in PaaS that you might take for granted in a SQL Managed Instance or in a SQL Server VM.

However, we came across a potential pitfall recently of running cross-database queries on Azure SQL Database. It affected one of our customers in a significant way so it’s worth reviewing if you’re delving into this scenario. In this post we’ll explore a typical cross-database query and show how in even the simplest of cases, you could max out the DTU on your production Azure SQL Database.

To set up the scenario, we’ll be loosely following Microsoft’s documented example for setting up a cross-database query across two Azure SQL Databases. However, we’ll modify this slightly to increase the number of rows in each table.

How to create an external table in Azure SQL Database

Step 1, create two Azure SQL Databases

Customers and Orders

Step 2, create two tables and load some data

Execute this code in the Customers database to create a table and insert a row:

create table [dbo].[CustomerInformation]( [CustomerID] [int] not null identity, [CustomerName] [varchar](50) null, [Company] [varchar](50) null constraint [CustID] primary key clustered ([CustomerID] asc) ) insert into CustomerInformation select 'Jack', 'ABC' from sys.objects O cross join sys.columns C

Execute the next code in the Orders database to create a single table with around 120k rows:

create table [dbo].[OrderInformation]( [OrderID] [int] not null identity, [CustomerID] [int] not null constraint OrdId primary key clustered (OrderId asc) ) insert into OrderInformation select o.[object_id] from sys.objects O cross join sys.columns C

Step 3, create a database scoped credential

In the Orders database, create a database scoped credential:

create master key encryption by password = '<master_key_password>'; create database scoped credential ElasticDBQueryCred with identity = '<username>', secret = '<password>'; go

Step 4, create an external data source using the credential:

In the Orders database, create an external data source:

create external data source MyElasticDBQueryDataSrc with (type = rdbms, location = '<server_name>.database.windows.net', database_name = 'Customers', credential = ElasticDBQueryCred, ) ; go

Step 5, create an external table using the external data source

In the Orders database, create an external table:

create external table [dbo].[CustomerInformation] ( [CustomerID] [int] not null, [CustomerName] [varchar](50) not null, [Company] [varchar](50) not null) with ( data_source = MyElasticDBQueryDataSrc)

How to query an external table in Azure SQL Database

Now that you’ve set up the external table, you’ll be able to query the table CustomerInformation from the Orders database. It is worth noting that the external table we’ve just created does exist in the local database’s sys.objects DMV. However, when you query this table, behind the scenes the optimizer is running a remote query against the “real” table in the Customers database.

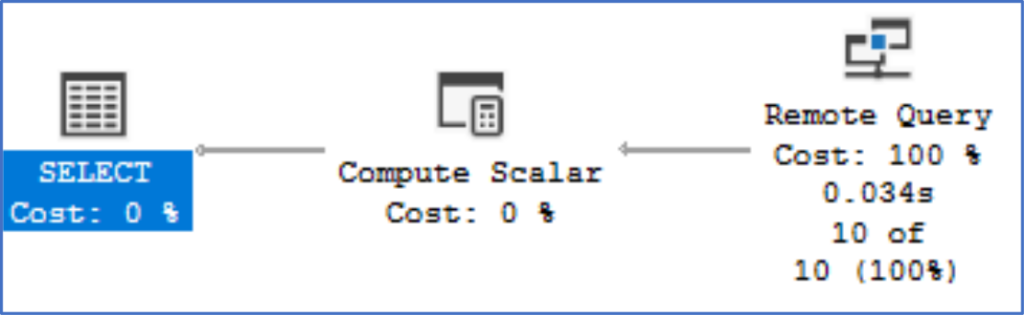

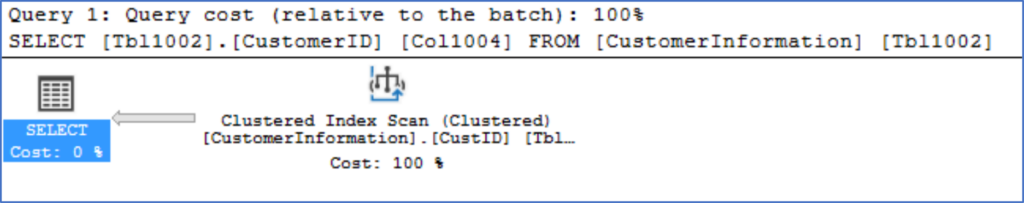

We can see this when running the following simple query and reviewing the associated execution plan:

select top 10 * from CustomerInformation

You can even join between local tables and the external table, which is a common use-case for cross-database querying. Unfortunately, this is where some problems can arise.

Why do external tables max out DTUs?

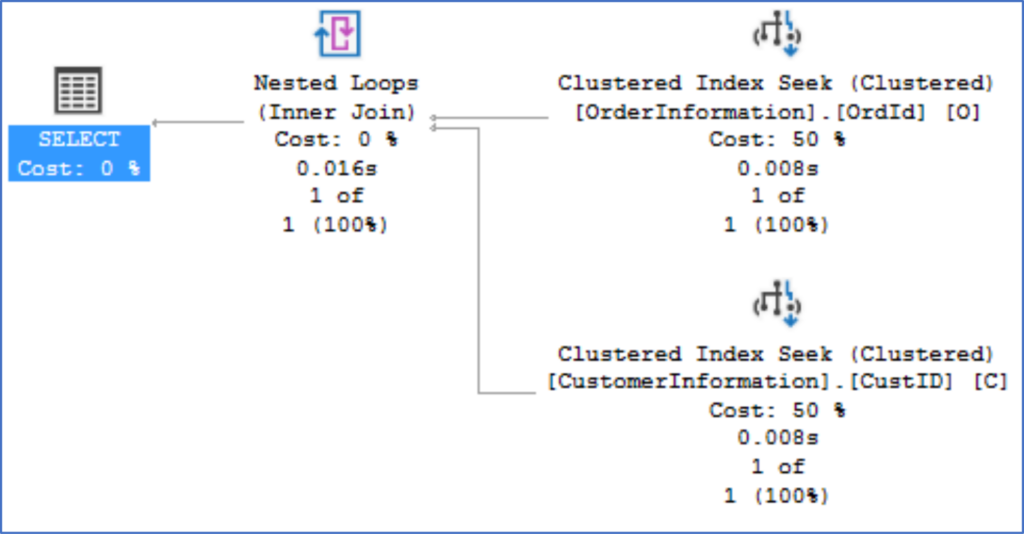

Consider the following cross-database join between CustomerInformation and OrderInformation:

select * from OrderInformation O inner join CustomerInformation C on C.CustomerID = O.CustomerID where OrderId = 25

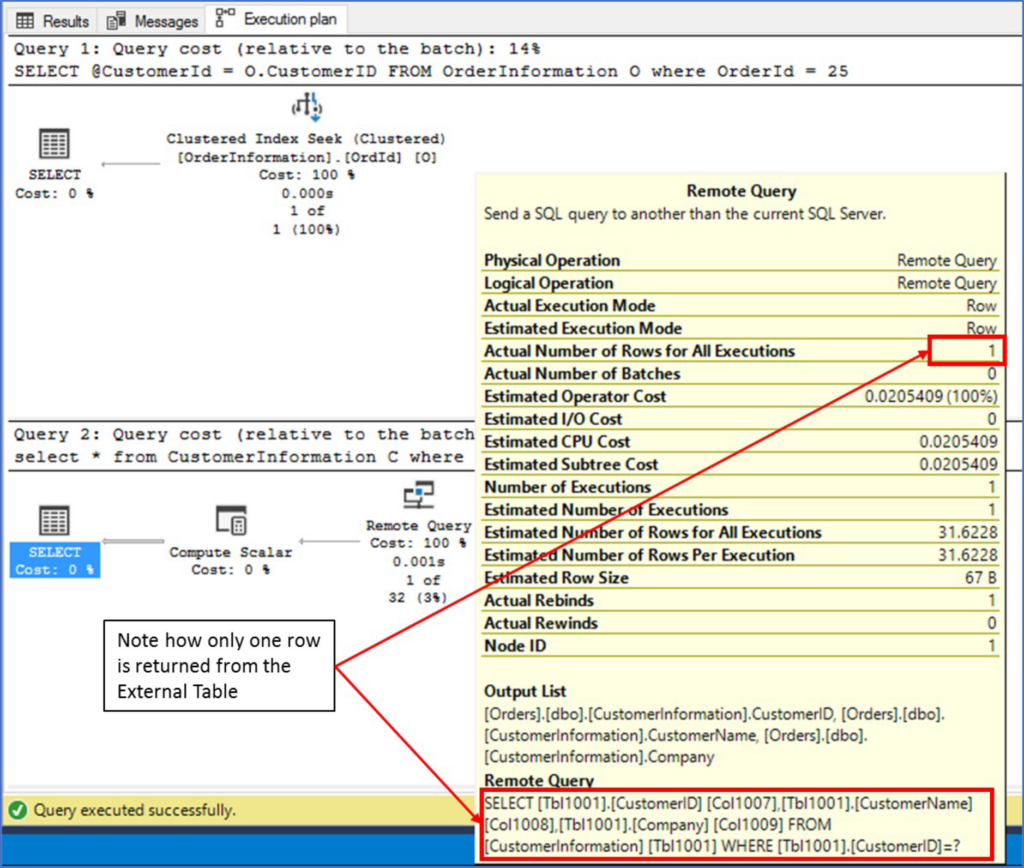

The above query returns one row and, as both tables are appropriately indexed, you’d expect to see a couple of clustered index seeks. In fact, if you were to run a similar cross-database query on SQL Server or Azure SQL Managed Instance, this is exactly what you would find. See the execution plan below:

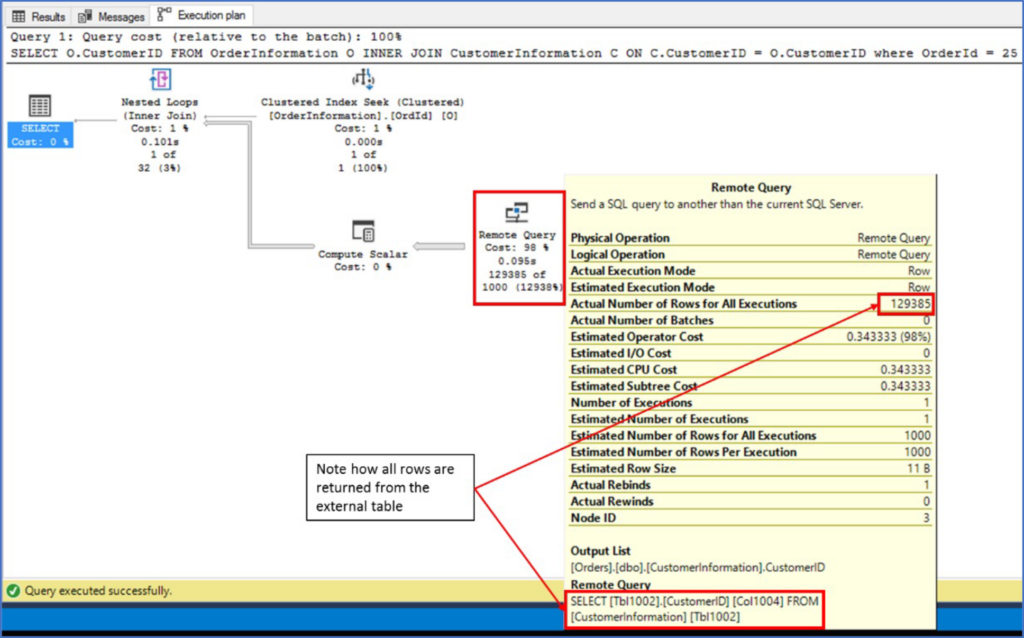

However, on Azure SQL Database we see the following execution plan:

On Azure SQL Database we see the use of a Remote Query operator and if we dig into the details of this operator, we can see that the entire contents of CustomerInformation are being retrieved before this is joined to OrderInformation.

This is quite significant because it means that every time we join on our external table, the entire contents of the external table are retrieved. Now, imagine that our external table has 2 million rows and that we have many such cross-database queries executing per minute!

This is exactly the situation one of our customers found itself in. Our monitoring alerted us to excessively high DTU in the Customers database and a quick exploration of the Query Store indicated that one query was responsible for almost all logical reads and CPU activity in the preceding ten minutes, which we can see below in the execution plan found within the Query Store of the Customers database.

There are a couple of important points to take from this and are worth mentioning. Firstly, a user might be running a query in the Orders databases, but the actual load is happening in a completely different database (it could even be on a completely different server). Secondly, all you can see in the database that is affected by this load is the remote query that is being run behind the scenes (as we see in the above image). This tells as very little about what the actual user query that is being run on the Orders database looks like.

Luckily, we had a hunch that this query was part of a remote query and, as we only had one database (Orders) that was configured with external tables, we queried the Query Store in the Orders database, trying to identify query plans that contained the above query.

How to search the Query Store for a query text

For this we ran the following query in the Orders database:

select * from sys.query_store_plan where query_plan like '%SELECT ![Tbl1002!].![CustomerID!] ![Col1004!] FROM ![CustomerInformation!] ![Tbl1002!]%' ESCAPE '!'

In the above case there was only one plan that contained the remote query text so having now isolated the originating query in the Orders database we were able to make some modifications to prevent this from happening.

How to avoid external tables maxing out your DTUs

First, it is important to recognise the limitation in the way that external tables offer cross-database functionality. It appears that filtering with a WHERE clause will use any indexes that exist on the table but filtering with JOINS does not. So, try to filter queries to external tables with the use of a WHERE clause. For example, we can adapt our earlier join into two separate queries as follows:

declare @CustomerId int; select @CustomerId = O.CustomerID from OrderInformation O where OrderId = 25 select * from CustomerInformation C where CustomerId = @CustomerId;

From the resulting execution plan, we can see that the filter is now applied when querying the external table, and only one row is returned:

Beyond this, really think about your database design and try to keep tables that you think you need to join within the same database. Naturally, this isn’t always going to be possible so if you really do need cross-database functionality, try to avoid joining tables across different databases and where you must join, try to only do so with small tables, thus limiting the rows that need to be returned from external tables.

Be open to alternative solutions, like Azure SQL Managed Instance or SQL Server in a VM, which have better native support for cross-database querying. Or you could consider importing data from other databases using SQL Data Sync for Azure.